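On the Modb (墨天轮) China Database Popularity Ranking (PS: until recently it was called the Domestic Database Popularity Ranking; it appears to have been renamed following last month's forum of domestic database leaders), TiDB has long held first place. Its main distinguishing feature is Hybrid Transactional and Analytical Processing (HTAP). Last July I used tiup playground to simulate a cluster, tried out some features, and passed the PCTA certification. So how far has TiDB come since then? Let's take a look and try it out!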

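TiDB Architecture

1. TiDB system architecture

TiDB database architecture

2. PD overall architecture

3. TiDB Server overall architecture

4. TiKV overall architecture

The overall architecture is as shown in the diagrams above. Deploying a production environment like this requires meeting the following hardware requirements.

Single-Machine TiDB Deployment

Setting up a full environment like the one above is a stretch for a personal laptop. However, there is now an official way to deploy a complete environment on a single machine, so let's try it. First, prepare a Linux or Mac system with internet access.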

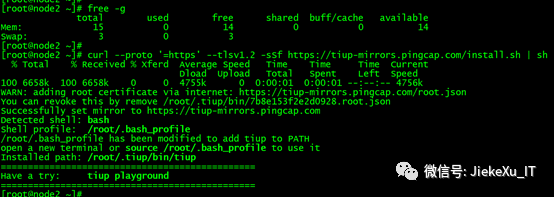

1. Download and install TiUP with curl:

curl --proto '=https' --tlsv1.2 -sSf https://tiup-mirrors.pingcap.com/install.sh | sh

2. Load the updated environment variables:

source /root/.bash_profile

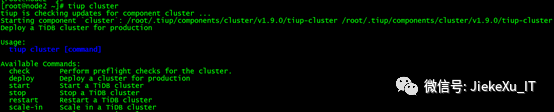

3. Install the TiUP cluster component:

tiup cluster

4. If TiUP cluster is already installed on the machine, update it to the latest version:

tiup update --self && tiup update cluster

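5. Raise the sshd connection limit

Because a multi-machine deployment is being simulated on one host, use the root user to raise the connection limit of the sshd service:

- Edit /etc/ssh/sshd_config and set MaxSessions to 20.

- Restart the sshd service: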

service sshd restart

[root@node2 ~]# grep MaxSessions /etc/ssh/sshd_config

MaxSessions 20
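The edit in this step can also be scripted. The following is a minimal sketch, not part of the original walkthrough: `set_max_sessions` is a hypothetical helper name, and the demo runs against a scratch file rather than the live /etc/ssh/sshd_config, so it can be tried safely before touching the real configuration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set MaxSessions in an sshd_config-style file.
# It rewrites an existing directive (commented out or not) or appends one.
set_max_sessions() {
  local file="$1" value="$2"
  if grep -Eq '^[[:space:]]*#?[[:space:]]*MaxSessions[[:space:]]' "$file"; then
    # Replace every matching line in place; sed keeps a .bak copy of the file.
    sed -i.bak -E "s/^[[:space:]]*#?[[:space:]]*MaxSessions[[:space:]].*/MaxSessions ${value}/" "$file"
  else
    # No directive present yet, so add one at the end of the file.
    printf 'MaxSessions %s\n' "$value" >> "$file"
  fi
}

# Demo on a scratch file that mimics the default commented-out directive.
demo_cfg="$(mktemp)"
printf '#MaxSessions 10\nPort 22\n' > "$demo_cfg"
set_max_sessions "$demo_cfg" 20
grep MaxSessions "$demo_cfg"   # prints: MaxSessions 20
```

When pointed at the real file as root, the sshd service still has to be restarted afterwards, as in the step above.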

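6. Create and start the cluster

Edit a configuration file based on the template below and name it topo.yaml, where:

- user: "tidb": the cluster is managed internally through the tidb system user (created automatically during deployment), which by default logs in to the target machine over ssh on port 22

- replication.enable-placement-rules: this PD parameter is set to ensure that TiFlash runs properly

- host: set this to the IP of the deployment host

The configuration template is as follows (vim topo.yaml):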

# # Global variables are applied to all deployments and used as the default value of
# # the deployments if a specific deployment value is missing.
global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/data/tidb-deploy"
  data_dir: "/data/tidb-data"

# # Monitored variables are applied to all the machines.
monitored:
  node_exporter_port: 9100
  blackbox_exporter_port: 9115

server_configs:
  tidb:
    log.slow-threshold: 500
  tikv:
    readpool.storage.use-unified-pool: false
    readpool.coprocessor.use-unified-pool: true
  pd:
    replication.enable-placement-rules: true
    replication.location-labels: ["host"]
  tiflash:
    logger.level: "info"

pd_servers:
  - host: 10.0.0.251

tidb_servers:
  - host: 10.0.0.251

tikv_servers:
  - host: 10.0.0.251
    port: 20160
    status_port: 20180
    config:
      server.labels: { host: "logic-host-1" }
  - host: 10.0.0.251
    port: 20161
    status_port: 20181
    config:
      server.labels: { host: "logic-host-2" }
  - host: 10.0.0.251
    port: 20162
    status_port: 20182
    config:
      server.labels: { host: "logic-host-3" }

tiflash_servers:
  - host: 10.0.0.251

monitoring_servers:
  - host: 10.0.0.251

grafana_servers:
  - host: 10.0.0.251
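Because all three TiKV instances in this topology share one host, each needs its own port and status_port. As a rough pre-flight check, the sketch below scans a topology file for host:port pairs that are declared more than once. `find_port_conflicts` is a hypothetical helper. It only sees ports written explicitly in the file (default ports are not considered), and its line-based matching is an assumption about the simple layout used here, not a real YAML parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report host:port pairs declared more than once.
find_port_conflicts() {
  awk '
    # Remember the host of the instance entry we are currently inside.
    /^[ \t]*-[ \t]*host:/ { host = $NF; next }
    # Record explicitly declared service ports for that host.
    /^[ \t]*(port|status_port):/ {
      key = host ":" $NF
      if (seen[key]++) print "conflict: " key
    }
  ' "$1"
}

# Demo: two TiKV entries that accidentally reuse port 20160.
demo_topo="$(mktemp)"
cat > "$demo_topo" <<'EOF'
tikv_servers:
  - host: 10.0.0.251
    port: 20160
    status_port: 20180
  - host: 10.0.0.251
    port: 20160
    status_port: 20181
EOF
find_port_conflicts "$demo_topo"   # prints: conflict: 10.0.0.251:20160
```

Run against the topo.yaml from this step, the helper should print nothing, since every TiKV entry uses distinct ports.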

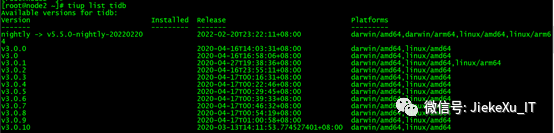

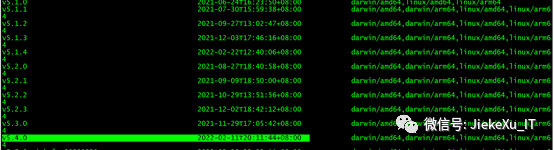

7. List the TiDB versions currently available for deployment:

tiup list tidb

At the time of writing, the latest version is 5.4.0.

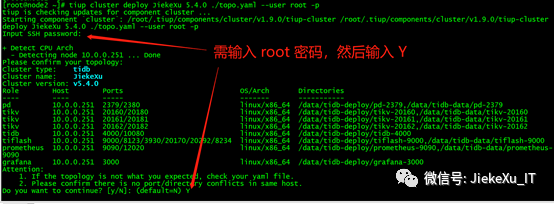

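8. Run the cluster deployment command:

tiup cluster deploy JiekeXu 5.4.0 ./topo.yaml --user root -p

- The first argument (JiekeXu here) sets the cluster name

- The second argument (5.4.0 here) sets the cluster version; run tiup list tidb to see which TiDB versions can currently be deployed

Follow the prompts, entering "y" and the root password, to complete the deployment:

Do you want to continue? [y/N]: y

Wait for the downloads to finish; how long this takes depends on your network speed.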

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.9.0/tiup-cluster /root/.tiup/components/cluster/v1.9.0/tiup-cluster deploy JiekeXu 5.4.0 ./topo.yaml --user root -p

Input SSH password:

+ Detect CPU Arch

- Detecting node 10.0.0.251 ... Done

Please confirm your topology:

Cluster type: tidb

Cluster name: JiekeXu

Cluster version: v5.4.0

Role Host Ports OS/Arch Directories

---- ---- ----- ------- -----------

pd 10.0.0.251 2379/2380 linux/x86_64 /data/tidb-deploy/pd-2379,/data/tidb-data/pd-2379

tikv 10.0.0.251 20160/20180 linux/x86_64 /data/tidb-deploy/tikv-20160,/data/tidb-data/tikv-20160

tikv 10.0.0.251 20161/20181 linux/x86_64 /data/tidb-deploy/tikv-20161,/data/tidb-data/tikv-20161

tikv 10.0.0.251 20162/20182 linux/x86_64 /data/tidb-deploy/tikv-20162,/data/tidb-data/tikv-20162

tidb 10.0.0.251 4000/10080 linux/x86_64 /data/tidb-deploy/tidb-4000

tiflash 10.0.0.251 9000/8123/3930/20170/20292/8234 linux/x86_64 /data/tidb-deploy/tiflash-9000,/data/tidb-data/tiflash-9000

prometheus 10.0.0.251 9090/12020 linux/x86_64 /data/tidb-deploy/prometheus-9090,/data/tidb-data/prometheus-9090

grafana 10.0.0.251 3000 linux/x86_64 /data/tidb-deploy/grafana-3000

Attention:

1. If the topology is not what you expected, check your yaml file.

2. Please confirm there is no port/directory conflicts in same host.

Do you want to continue? [y/N]: (default=N) y

+ Generate SSH keys ... Done

+ Download TiDB components

- Download pd:v5.4.0 (linux/amd64) ... Done

- Download tikv:v5.4.0 (linux/amd64) ... Done

- Download tidb:v5.4.0 (linux/amd64) ... Done

- Download tiflash:v5.4.0 (linux/amd64) ... Done

- Download prometheus:v5.4.0 (linux/amd64) ... Done

- Download grafana:v5.4.0 (linux/amd64) ... Done

- Download node_exporter: (linux/amd64) ... Done

- Download blackbox_exporter: (linux/amd64) ... Done

+ Initialize target host environments

- Prepare 10.0.0.251:22 ... Done

+ Deploy TiDB instance

- Copy pd -> 10.0.0.251 ... Done

- Copy tikv -> 10.0.0.251 ... Done

- Copy tikv -> 10.0.0.251 ... Done

- Copy tikv -> 10.0.0.251 ... Done

- Copy tidb -> 10.0.0.251 ... Done

- Copy tiflash -> 10.0.0.251 ... Done

- Copy prometheus -> 10.0.0.251 ... Done

- Copy grafana -> 10.0.0.251 ... Done

- Deploy node_exporter -> 10.0.0.251 ... Done

- Deploy blackbox_exporter -> 10.0.0.251 ... Done

+ Copy certificate to remote host

+ Init instance configs

- Generate config pd -> 10.0.0.251:2379 ... Done

- Generate config tikv -> 10.0.0.251:20160 ... Done

- Generate config tikv -> 10.0.0.251:20161 ... Done

- Generate config tikv -> 10.0.0.251:20162 ... Done

- Generate config tidb -> 10.0.0.251:4000 ... Done

- Generate config tiflash -> 10.0.0.251:9000 ... Done

- Generate config prometheus -> 10.0.0.251:9090 ... Done

- Generate config grafana -> 10.0.0.251:3000 ... Done

+ Init monitor configs

- Generate config node_exporter -> 10.0.0.251 ... Done

- Generate config blackbox_exporter -> 10.0.0.251 ... Done

+ Check status

Enabling component pd

Enabling instance 10.0.0.251:2379

Enable instance 10.0.0.251:2379 success

Enabling component tikv

Enabling instance 10.0.0.251:20162

Enabling instance 10.0.0.251:20161

Enabling instance 10.0.0.251:20160

Enable instance 10.0.0.251:20160 success

Enable instance 10.0.0.251:20161 success

Enable instance 10.0.0.251:20162 success

Enabling component tidb

Enabling instance 10.0.0.251:4000

Enable instance 10.0.0.251:4000 success

Enabling component tiflash

Enabling instance 10.0.0.251:9000

Enable instance 10.0.0.251:9000 success

Enabling component prometheus

Enabling instance 10.0.0.251:9090

Enable instance 10.0.0.251:9090 success

Enabling component grafana

Enabling instance 10.0.0.251:3000

Enable instance 10.0.0.251:3000 success

Enabling component node_exporter

Enabling instance 10.0.0.251

Enable 10.0.0.251 success

Enabling component blackbox_exporter

Enabling instance 10.0.0.251

Enable 10.0.0.251 success

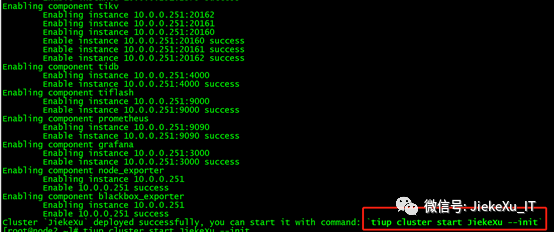

Cluster `JiekeXu` deployed successfully, you can start it with command: `tiup cluster start JiekeXu --init`

9. Start the TiDB cluster with --init

tiup cluster start JiekeXu --init

tiup is checking updates for component cluster ...

Starting component `cluster`: /root/.tiup/components/cluster/v1.9.0/tiup-cluster /root/.tiup/components/cluster/v1.9.0/tiup-cluster start JiekeXu --init

Starting cluster JiekeXu...

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/JiekeXu/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/JiekeXu/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [ Serial ] - StartCluster

Starting component pd

Starting instance 10.0.0.251:2379

Start instance 10.0.0.251:2379 success

Starting component tikv

Starting instance 10.0.0.251:20162

Starting instance 10.0.0.251:20160

Starting instance 10.0.0.251:20161

Start instance 10.0.0.251:20161 success

Start instance 10.0.0.251:20162 success

Start instance 10.0.0.251:20160 success

Starting component tidb

Starting instance 10.0.0.251:4000

Start instance 10.0.0.251:4000 success

Starting component tiflash

Starting instance 10.0.0.251:9000

Start instance 10.0.0.251:9000 success

Starting component prometheus

Starting instance 10.0.0.251:9090

Start instance 10.0.0.251:9090 success

Starting component grafana

Starting instance 10.0.0.251:3000

Start instance 10.0.0.251:3000 success

Starting component node_exporter

Starting instance 10.0.0.251

Start 10.0.0.251 success

Starting component blackbox_exporter

Starting instance 10.0.0.251

Start 10.0.0.251 success

+ [ Serial ] - UpdateTopology: cluster=JiekeXu

Started cluster `JiekeXu` successfully

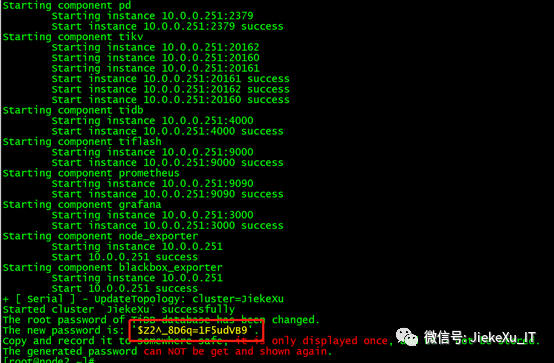

The root password of TiDB database has been changed.

The new password is: '$Z2^_8D6q=1F5udVB9'.

Copy and record it to somewhere safe, it is only displayed once, and will not be stored.

The generated password can NOT be get and shown again.

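As the output above shows, PD, TiKV, TiDB, and TiFlash have all started, the cluster is up and initialized, and the root user's password is displayed.

10. Access the cluster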

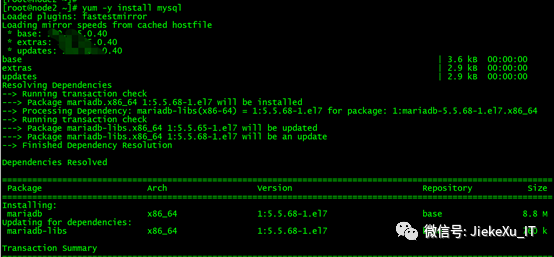

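Connect with the MySQL client

Install the MySQL client. Skip this step if the MySQL client or server is already installed.

yum -y install mysql

Connect to the TiDB database. The password is the string generated during initialization in the previous step, '$Z2^_8D6q=1F5udVB9'.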

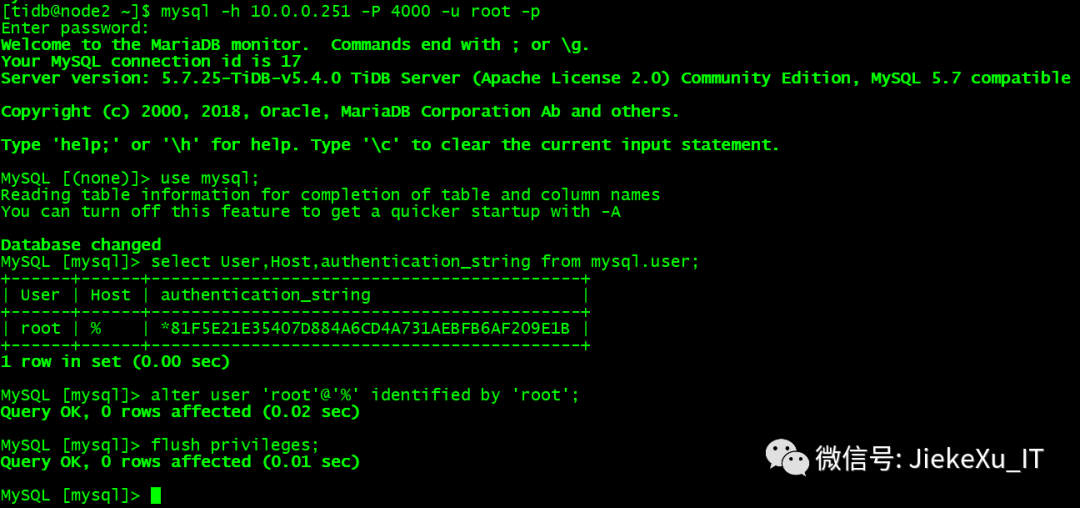

mysql -h 10.0.0.251 -P 4000 -u root -p

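The root password generated at initialization is random and hard to remember. Since this is a learning environment, I changed it to "root", as shown below: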

[tidb@node2 ~]$ mysql -h 10.0.0.251 -P 4000 -u root -p
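The statement used to change the password is not reproduced in the text above. One way to do it, sketched here under assumptions, is a standard `ALTER USER ... IDENTIFIED BY` statement sent through the MySQL client. `build_alter_user_sql` is a hypothetical helper; the account 'root'@'%' is assumed to be the root account created at initialization, and the helper only escapes single quotes, so treat it as a lab convenience rather than a hardened tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build an ALTER USER statement for the given account.
build_alter_user_sql() {
  local user="$1" host="$2" password
  # Double any single quote so the password stays a single SQL string literal.
  password="$(printf '%s' "$3" | sed "s/'/''/g")"
  printf "ALTER USER '%s'@'%s' IDENTIFIED BY '%s';\n" "$user" "$host" "$password"
}

build_alter_user_sql root '%' root
# prints: ALTER USER 'root'@'%' IDENTIFIED BY 'root';

# Against the lab cluster from this post (prompts for the current password):
#   mysql -h 10.0.0.251 -P 4000 -u root -p -e "$(build_alter_user_sql root '%' root)"
```

A password as weak as "root" only makes sense on a throwaway learning cluster like this one.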

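Run the following command to confirm the list of currently deployed clusters:

tiup cluster list

Run the following command to view the cluster topology and status: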

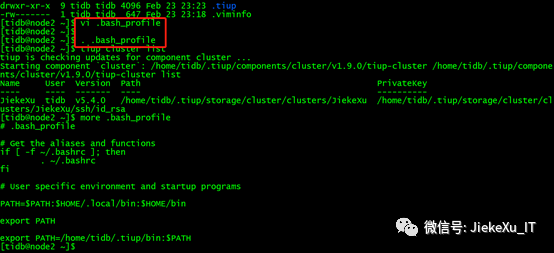

tiup cluster display JiekeXu

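Finally, TiUP was downloaded and is managed by root, while the deployment created a tidb user that runs the services. So I decided to copy the downloaded TiUP software into the tidb user's home directory and configure the corresponding environment variables there.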

# cp -r .tiup/ /home/tidb/

# chown -R tidb:tidb /home/tidb/.tiup/

Check the cluster status, then stop and start the cluster

[tidb@node2 ~]$ tiup cluster display JiekeXu

tiup is checking updates for component cluster ...

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.9.0/tiup-cluster /home/tidb/.tiup/components/cluster/v1.9.0/tiup-cluster display JiekeXu

Cluster type: tidb

Cluster name: JiekeXu

Cluster version: v5.4.0

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://10.0.0.251:2379/dashboard

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

10.0.0.251:3000 grafana 10.0.0.251 3000 linux/x86_64 Up - /data/tidb-deploy/grafana-3000

10.0.0.251:2379 pd 10.0.0.251 2379/2380 linux/x86_64 Up|L|UI /data/tidb-data/pd-2379 /data/tidb-deploy/pd-2379

10.0.0.251:9090 prometheus 10.0.0.251 9090/12020 linux/x86_64 Up /data/tidb-data/prometheus-9090 /data/tidb-deploy/prometheus-9090

10.0.0.251:4000 tidb 10.0.0.251 4000/10080 linux/x86_64 Up - /data/tidb-deploy/tidb-4000

10.0.0.251:9000 tiflash 10.0.0.251 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /data/tidb-data/tiflash-9000 /data/tidb-deploy/tiflash-9000

10.0.0.251:20160 tikv 10.0.0.251 20160/20180 linux/x86_64 Up /data/tidb-data/tikv-20160 /data/tidb-deploy/tikv-20160

10.0.0.251:20161 tikv 10.0.0.251 20161/20181 linux/x86_64 Up /data/tidb-data/tikv-20161 /data/tidb-deploy/tikv-20161

10.0.0.251:20162 tikv 10.0.0.251 20162/20182 linux/x86_64 Up /data/tidb-data/tikv-20162 /data/tidb-deploy/tikv-20162

Total nodes: 8

[tidb@node2 ~]$ tiup cluster stop JiekeXu

tiup is checking updates for component cluster ...

A new version of cluster is available:

The latest version: v1.9.1

Local installed version: v1.9.0

Update current component: tiup update cluster

Update all components: tiup update --all

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.9.0/tiup-cluster /home/tidb/.tiup/components/cluster/v1.9.0/tiup-cluster stop JiekeXu

Will stop the cluster JiekeXu with nodes: , roles: .

Do you want to continue? [y/N]:(default=N) y

+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/JiekeXu/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/JiekeXu/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [ Serial ] - StopCluster

Stopping component grafana

Stopping instance 10.0.0.251

Stop grafana 10.0.0.251:3000 success

Stopping component prometheus

Stopping instance 10.0.0.251

Stop prometheus 10.0.0.251:9090 success

Stopping component tiflash

Stopping instance 10.0.0.251

Stop tiflash 10.0.0.251:9000 success

Stopping component tidb

Stopping instance 10.0.0.251

Stop tidb 10.0.0.251:4000 success

Stopping component tikv

Stopping instance 10.0.0.251

Stopping instance 10.0.0.251

Stopping instance 10.0.0.251

Stop tikv 10.0.0.251:20160 success

Stop tikv 10.0.0.251:20162 success

Stop tikv 10.0.0.251:20161 success

Stopping component pd

Stopping instance 10.0.0.251

Stop pd 10.0.0.251:2379 success

Stopping component node_exporter

Stopping instance 10.0.0.251

Stop 10.0.0.251 success

Stopping component blackbox_exporter

Stopping instance 10.0.0.251

Stop 10.0.0.251 success

Stopped cluster `JiekeXu` successfully

[tidb@node2 ~]$ tiup cluster start JiekeXu

tiup is checking updates for component cluster ...

A new version of cluster is available:

The latest version: v1.9.1

Local installed version: v1.9.0

Update current component: tiup update cluster

Update all components: tiup update --all

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.9.0/tiup-cluster /home/tidb/.tiup/components/cluster/v1.9.0/tiup-cluster start JiekeXu

Starting cluster JiekeXu...

+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/JiekeXu/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/JiekeXu/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [Parallel] - UserSSH: user=tidb, host=10.0.0.251

+ [ Serial ] - StartCluster

Starting component pd

Starting instance 10.0.0.251:2379

Start instance 10.0.0.251:2379 success

Starting component tikv

Starting instance 10.0.0.251:20162

Starting instance 10.0.0.251:20160

Starting instance 10.0.0.251:20161

Start instance 10.0.0.251:20162 success

Start instance 10.0.0.251:20161 success

Start instance 10.0.0.251:20160 success

Starting component tidb

Starting instance 10.0.0.251:4000

Start instance 10.0.0.251:4000 success

Starting component tiflash

Starting instance 10.0.0.251:9000

Start instance 10.0.0.251:9000 success

Starting component prometheus

Starting instance 10.0.0.251:9090

Start instance 10.0.0.251:9090 success

Starting component grafana

Starting instance 10.0.0.251:3000

Start instance 10.0.0.251:3000 success

Starting component node_exporter

Starting instance 10.0.0.251

Start 10.0.0.251 success

Starting component blackbox_exporter

Starting instance 10.0.0.251

Start 10.0.0.251 success

+ [ Serial ] - UpdateTopology: cluster=JiekeXu

Started cluster `JiekeXu` successfully

From the output above, the TiDB cluster starts its components in this order: pd ----> tikv ----> tidb ----> tiflash ----> prometheus ----> grafana ----> node_exporter ----> blackbox_exporter

The cluster shuts down in this order: grafana ----> prometheus ----> tiflash ----> tidb ----> tikv ----> pd ----> node_exporter ----> blackbox_exporter
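The ordering can be pulled straight out of saved TiUP output instead of being read by eye. A small sketch, assuming the log lines keep the `Starting component <name>` and `Stopping component <name>` shape shown above; `component_order` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Read tiup output on stdin and print component names in the order they appear.
component_order() {
  # NF == 3 skips the banner line "Starting component `cluster`: /path ...",
  # which begins with the same words but carries extra fields.
  awk 'NF == 3 && /^(Starting|Stopping) component / {
         printf "%s%s", (n++ ? " -> " : ""), $3
       }
       END { print "" }'
}

printf '%s\n' \
  'Starting component `cluster`: /root/.tiup/components/cluster/v1.9.0/tiup-cluster start JiekeXu' \
  'Starting component pd' \
  'Starting instance 10.0.0.251:2379' \
  'Starting component tikv' \
  'Starting component tidb' | component_order
# prints: pd -> tikv -> tidb
```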

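TiDB 5.4.0: Key Features

Release date: February 15, 2022. Version 5.4.0 provides the following key features:

- Support for the GBK character set

- Support for the Index Merge data access method, which merges the filtering results of indexes on multiple columns

- Support for bounded stale reads through a session variable

- Support for persisting the configuration used to collect statistics

- Support for using Raft Engine as the log storage engine of TiKV (experimental)

- Reduced impact of backups on the cluster

- Support for Azure Blob Storage as a backup target

- Continued improvements to the stability and performance of the TiFlash columnar storage engine and the MPP compute engine

- A new switch in TiDB Lightning that controls whether importing into an existing table that already has data is allowed

- Improved Continuous Profiling (experimental)

- User authentication and authorization support in TiSpark

Before v5.4.0, TiDB supported the ascii, binary, latin1, utf8, and utf8mb4 character sets. To better serve Chinese users, TiDB supports the GBK character set starting from v5.4.0.

Index Merge was introduced in TiDB v4.0 as an experimental feature. It is a query execution optimization that can greatly improve the efficiency of condition filtering when a query scans multiple columns. Take the query below: if the two filter conditions joined by OR in the WHERE clause each have an index on their respective columns, key1 and key2, Index Merge can filter through both indexes separately and then merge the results into the final result.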

SELECT * FROM table WHERE key1 <= 100 OR key2 = 200;

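Previously, a TiDB query on a table could use only one index, so it could not filter through multiple indexes at once. By comparison, Index Merge avoids the large and possibly unnecessary data scans this caused. It also lets users who need flexible queries over arbitrary combinations of columns get efficient, stable performance from single-column indexes, without building many composite multi-column indexes.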
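To make the idea concrete, here is a toy illustration in shell, not TiDB's implementation: each "index lookup" returns the row ids that satisfy one condition, and the two id lists are unioned before any full rows would be fetched. The sample rows and the helper name `index_merge_union` are made up for the example.

```shell
#!/usr/bin/env bash
# Rows are "id,key1,key2". Each awk call stands in for a lookup on one
# single-column index and yields only the matching row ids.
index_merge_union() {
  local rows="$1"
  {
    printf '%s\n' "$rows" | awk -F, '$2 <= 100 { print $1 }'   # ids with key1 <= 100
    printf '%s\n' "$rows" | awk -F, '$3 == 200 { print $1 }'   # ids with key2 = 200
  } | sort -n -u                                                # union without duplicates
}

rows='1,50,10
2,150,200
3,80,200
4,300,5'
index_merge_union "$rows"
# prints 1, 2 and 3 on separate lines; row 3 matches both conditions but appears once
```

Row 4 matches neither condition and is never touched, which is the point: only the rows selected by at least one index need to be read.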

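There are many more new features that I won't cover here. If you're interested, see the official documentation: https://docs.pingcap.com/zh/tidb/stable/release-5.4.0

That's all. I hope this helps you as you read along. If you found it useful, share it with friends who might benefit, so we can learn and improve together.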